Apache Airflow is an open-source platform for developing, scheduling, and monitoring batch-oriented workflows. Its extensible Python framework lets you build workflows that connect with virtually any technology, and its web interface helps you manage the state of your workflows. Airflow can be deployed in many ways, from a single process on your laptop to a distributed setup, or self-hosted on a service like Elestio.

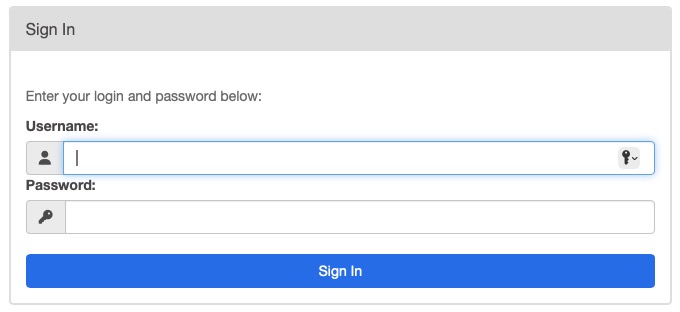

Sign In

On your first visit to the site, you will be presented with the login/signup screen.

When your instance is first created, an account is created for you with the email you chose. You can get the password for this account by going to your Elestio dashboard and clicking on the "Show Password" button.

Enter your email and password, then click the "Sign In" button.

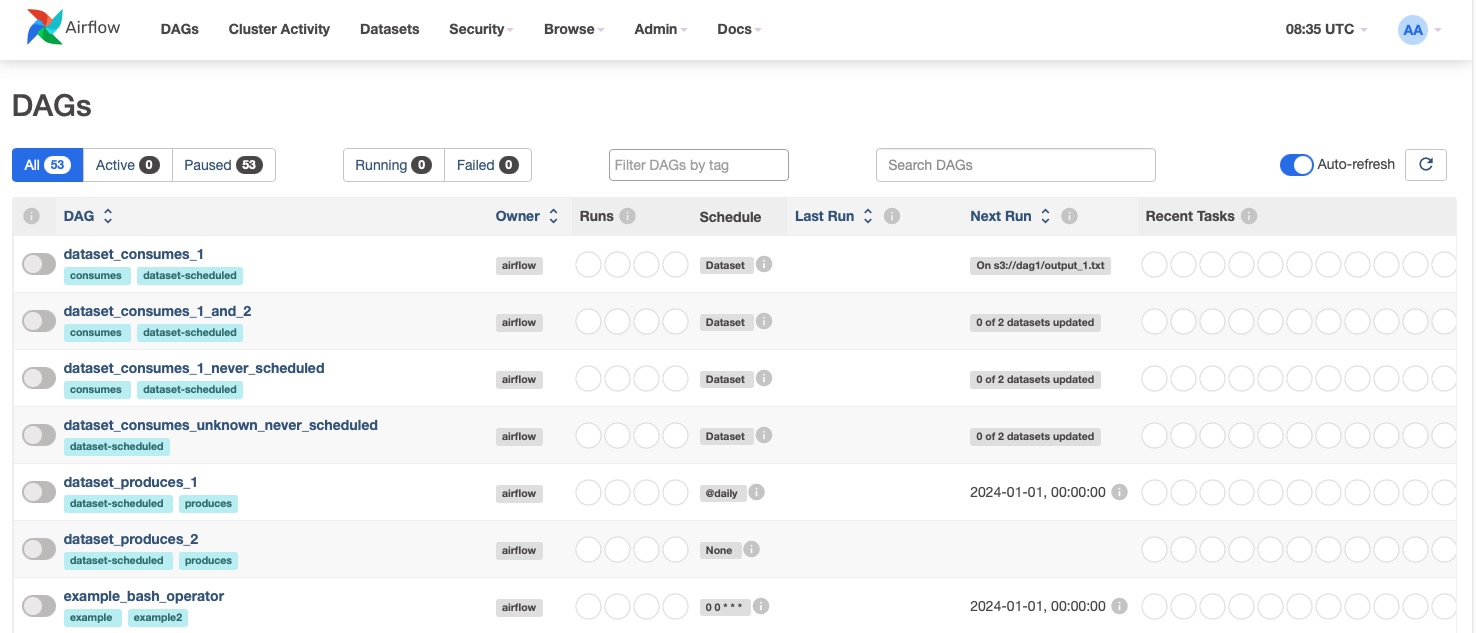

DAGs (Directed Acyclic Graphs)

In Airflow, a DAG (Directed Acyclic Graph) is a collection of tasks together with the dependencies between them. Each DAG is defined in a Python script, so the workflow structure is organized and managed as code. "Acyclic" means the dependencies never loop back: no task can depend, directly or indirectly, on itself. The web interface renders each DAG as a graph, which makes complex data pipelines easier to follow, and lets you schedule and monitor task execution to track the progress and status of each run. You can view your DAGs by clicking "DAGs" in the top menu.

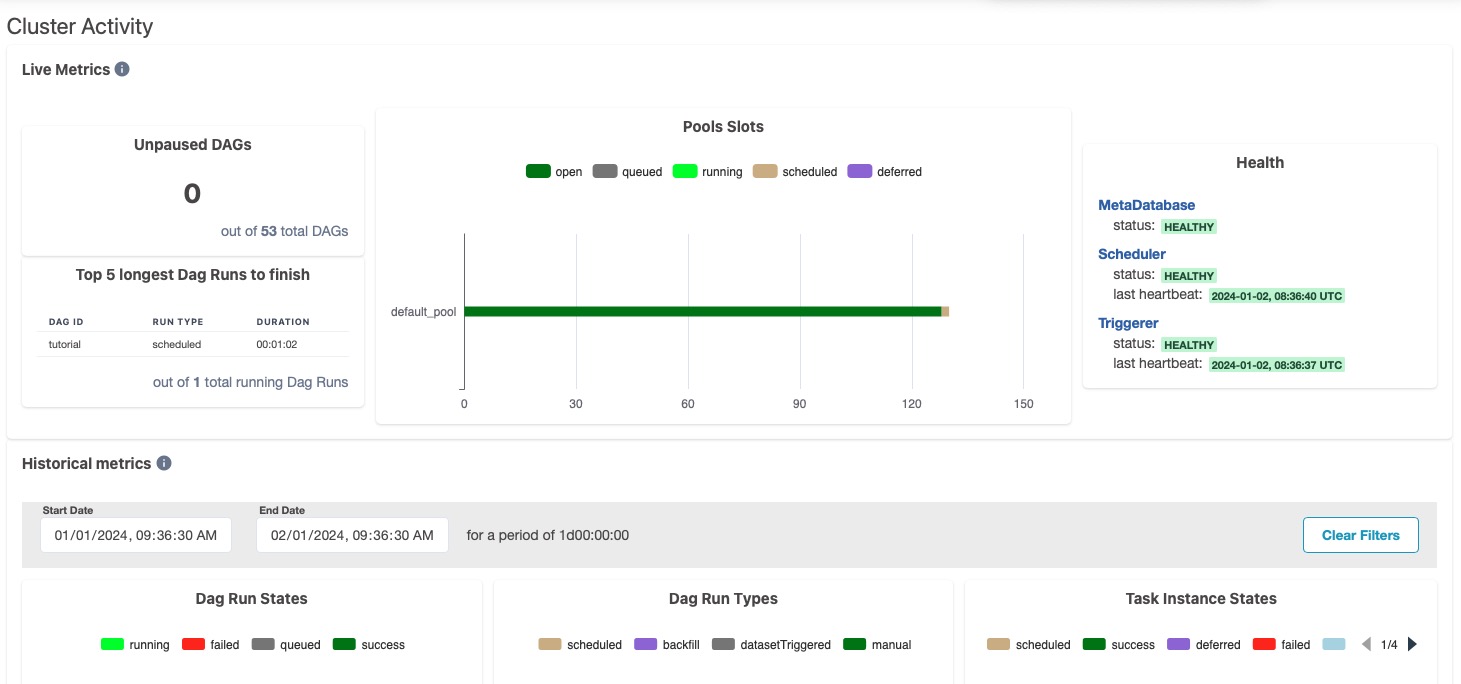
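To make the "acyclic" constraint concrete, here is a plain-Python sketch (not Airflow's API) that models a hypothetical four-task pipeline as a mapping from each task to the tasks it depends on, then derives one valid execution order with the standard library's `graphlib`. If the dependencies contain a loop, no such order exists and the function reports the cycle instead. In an actual Airflow DAG file you would declare the same edges between tasks with the `>>` operator.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
# In a real Airflow DAG file the same edges could be written as
# extract >> [transform, validate] >> load
PIPELINE = {
    "extract": set(),
    "transform": {"extract"},
    "validate": {"extract"},
    "load": {"transform", "validate"},
}


def execution_order(dependencies: dict[str, set[str]]) -> list[str]:
    """Return one valid run order, raising ValueError if the graph has a cycle."""
    try:
        return list(TopologicalSorter(dependencies).static_order())
    except CycleError as exc:
        # The second element of exc.args lists the nodes forming the cycle.
        raise ValueError(f"not a DAG, cycle found: {exc.args[1]}") from exc


if __name__ == "__main__":
    print(execution_order(PIPELINE))
```

`extract` always comes first and `load` last; `transform` and `validate` have no edge between them, so either may run first (Airflow could run them in parallel).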

Cluster Activity

The Cluster Activity view gives an overview of your Airflow cluster's health and activity. It shows the health of components such as the metadata database, scheduler, and triggerer, along with counts of DAG runs and task instances by state. This lets you monitor the overall status of your workflows and spot issues or bottlenecks in the system. Checking it regularly helps ensure that your workflows keep running smoothly and efficiently.

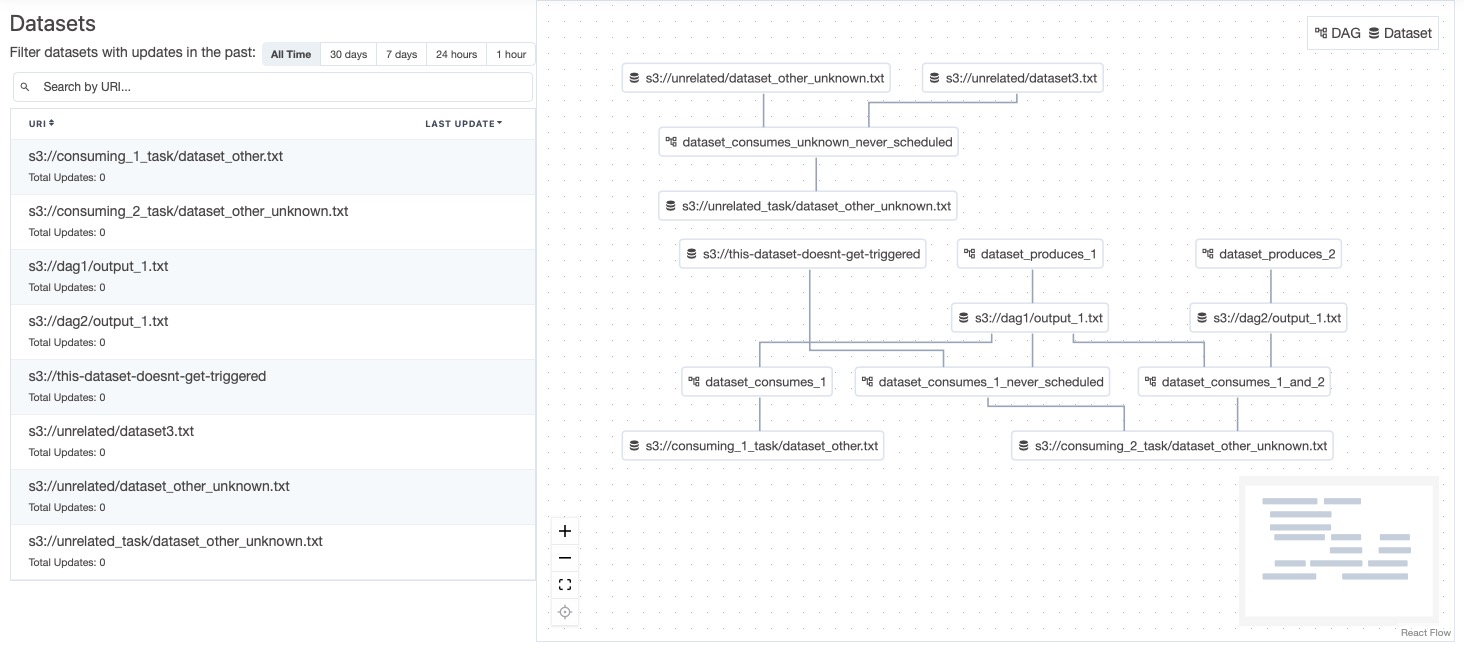
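Similar component-health information is exposed by the Airflow webserver's `/health` endpoint, which is handy for automated checks outside the UI. The sketch below assumes the JSON shape returned by that endpoint in Airflow 2.x, as written out in `SAMPLE_RESPONSE` (the heartbeat timestamps are made-up placeholders; compare against the response of your own instance). It returns the components that are not reporting "healthy". Fetching the payload over HTTP is left out so the example runs offline.

```python
import json

# Assumed shape of the /health response in Airflow 2.x; the values here are
# illustrative placeholders, not output from a real instance.
SAMPLE_RESPONSE = """
{
  "metadatabase": {"status": "healthy"},
  "scheduler": {"status": "unhealthy",
                "latest_scheduler_heartbeat": "2024-01-01T00:00:00+00:00"},
  "triggerer": {"status": "healthy",
                "latest_triggerer_heartbeat": "2024-01-01T00:05:00+00:00"},
  "dag_processor": {"status": null, "latest_dag_processor_heartbeat": null}
}
"""


def unhealthy_components(payload: str) -> list[str]:
    """Return names of components whose status is set but is not "healthy".

    A null status (e.g. a component that is not deployed) is skipped rather
    than treated as a failure.
    """
    report = json.loads(payload)
    return sorted(
        name
        for name, info in report.items()
        if info.get("status") is not None and info["status"] != "healthy"
    )


if __name__ == "__main__":
    print(unhealthy_components(SAMPLE_RESPONSE))  # ['scheduler']
```

Skipping null statuses is a design choice: optional components that are not running would otherwise raise false alarms.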

Datasets

The Datasets view shows a combined listing of the current datasets and a graph illustrating how they are produced and consumed by DAGs. Clicking any dataset in the list or the graph highlights it along with its relationships, and filters the list to show the recent history of task instances that have updated the dataset and whether those updates triggered further DAG runs.

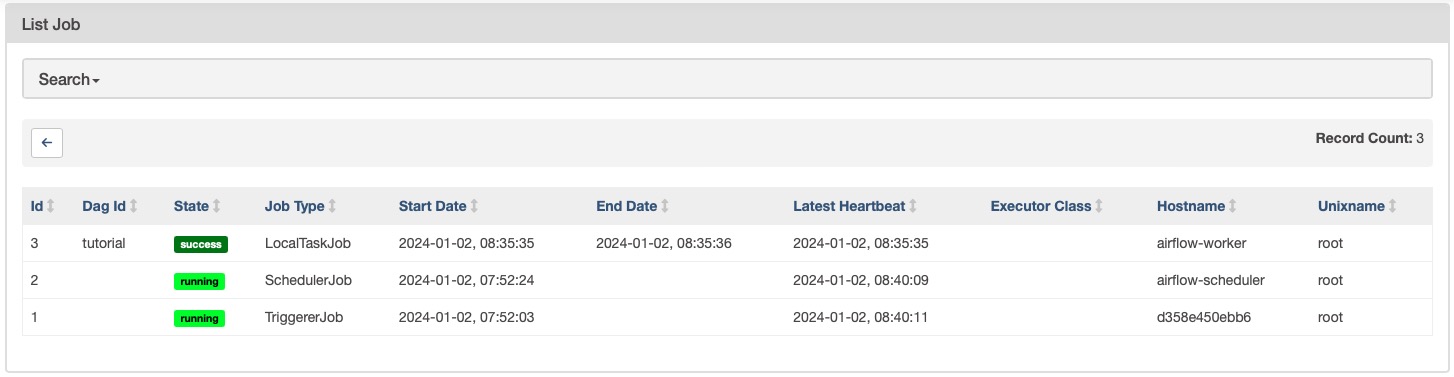
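The producer/consumer relationships in that graph follow a simple rule. In Airflow 2.4 and later, a producing task lists a dataset in its `outlets`, and a DAG whose `schedule` lists one or more datasets is queued once all of them have been updated since its last run. The hypothetical `ConsumerDag` class and `route_update` helper below model only that rule in plain Python, using made-up S3 URIs; they are not Airflow classes. In a real DAG file you would use `airflow.datasets.Dataset` with the `outlets` and `schedule` parameters instead.

```python
from dataclasses import dataclass, field


@dataclass
class ConsumerDag:
    """Hypothetical model of a DAG scheduled on a set of dataset URIs."""

    dag_id: str
    schedule: set[str]
    pending: set[str] = field(init=False)

    def __post_init__(self) -> None:
        # Initially every scheduled dataset is still awaited.
        self.pending = set(self.schedule)

    def on_dataset_updated(self, uri: str) -> bool:
        """Record an update and return True if this DAG should now run."""
        if uri not in self.schedule:
            return False  # this DAG does not consume the dataset
        self.pending.discard(uri)
        if self.pending:
            return False  # still waiting on other datasets
        # Every dataset was updated: trigger a run and start waiting again.
        self.pending = set(self.schedule)
        return True


def route_update(uri: str, consumers: list[ConsumerDag]) -> list[str]:
    """Return the IDs of consumer DAGs triggered by an update to `uri`."""
    return [dag.dag_id for dag in consumers if dag.on_dataset_updated(uri)]


if __name__ == "__main__":
    orders = "s3://example-bucket/orders.parquet"
    customers = "s3://example-bucket/customers.parquet"
    consumers = [
        ConsumerDag("daily_report", {orders, customers}),
        ConsumerDag("orders_audit", {orders}),
    ]
    print(route_update(orders, consumers))     # ['orders_audit']
    print(route_update(customers, consumers))  # ['daily_report']
```

Updating only the orders dataset triggers `orders_audit` straight away, while `daily_report` waits until the customers dataset has been updated too.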

Listing Jobs

The Jobs view lists the jobs Airflow runs internally, such as scheduler jobs, triggerer jobs, and the local task jobs that execute individual task instances. Each record shows details such as the job's state, type, hostname, and latest heartbeat. To open it, click "Browse > Jobs" in the top menu; you can search and filter by job ID, DAG ID, job type, start date, end date, and more. The other options under Browse, such as Audit Logs, Triggers, and Task Reschedules, provide additional insight into jobs and DAG runs.

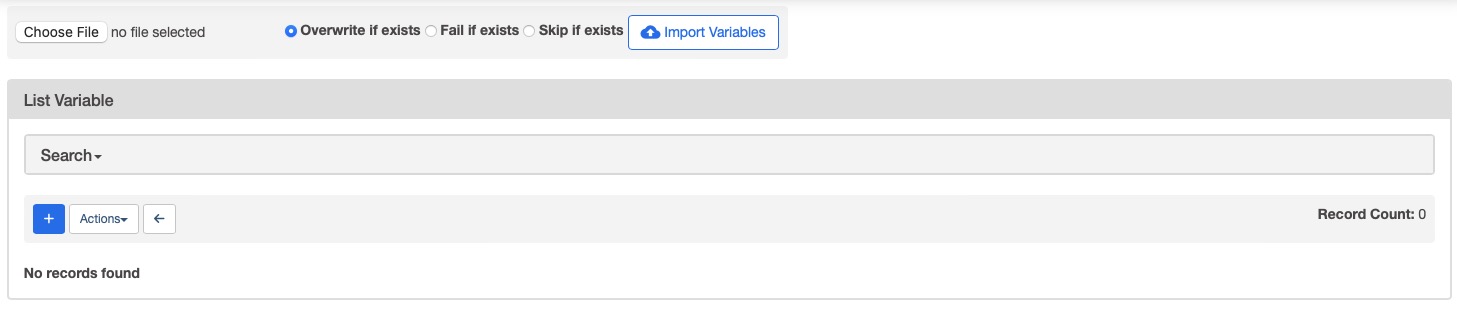
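The heartbeat column is what makes this listing useful for troubleshooting: a job that is still marked as running but has stopped heartbeating has probably died. The sketch below models a few of the view's columns as a hypothetical `JobRecord` dataclass, reproduces the job-type and start-date filtering, and flags running jobs whose latest heartbeat is older than a cutoff. The job-type strings are examples, and the 30-second `max_silence` default is an illustrative assumption, not a value taken from Airflow's configuration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional


@dataclass
class JobRecord:
    """Hypothetical subset of the columns shown in Browse > Jobs."""

    job_id: int
    job_type: str                 # e.g. "SchedulerJob", "LocalTaskJob"
    state: str                    # e.g. "running", "success", "failed"
    start_date: datetime
    latest_heartbeat: datetime
    dag_id: Optional[str] = None  # empty for scheduler/triggerer jobs


def filter_jobs(jobs: list[JobRecord], job_type: Optional[str] = None,
                started_after: Optional[datetime] = None) -> list[JobRecord]:
    """Keep jobs matching the given type and started at or after the given time."""
    return [
        job for job in jobs
        if (job_type is None or job.job_type == job_type)
        and (started_after is None or job.start_date >= started_after)
    ]


def stale_running_jobs(jobs: list[JobRecord], now: datetime,
                       max_silence: timedelta = timedelta(seconds=30)) -> list[JobRecord]:
    """Return running jobs whose last heartbeat is older than max_silence.

    The default cutoff is an assumption for illustration only.
    """
    return [
        job for job in jobs
        if job.state == "running" and now - job.latest_heartbeat > max_silence
    ]


if __name__ == "__main__":
    t0 = datetime(2024, 1, 1, tzinfo=timezone.utc)
    jobs = [
        JobRecord(1, "SchedulerJob", "running", t0, t0 + timedelta(minutes=10)),
        JobRecord(3, "TriggererJob", "running", t0, t0 + timedelta(minutes=2)),
    ]
    now = t0 + timedelta(minutes=10, seconds=10)
    print([job.job_id for job in stale_running_jobs(jobs, now)])  # [3]
```

Timezone-aware datetimes are used throughout so that subtracting heartbeat times from `now` never mixes naive and aware values.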

Variables view & Import

The Variables view lets you manage the key-value pairs that your DAGs and tasks read at runtime. You can list, create, edit, or delete variables. By default, the values of variables whose keys contain certain sensitive words are hidden, but this can be configured to display them in clear text. It is advisable to mask sensitive information such as passwords before storing it in variables. You can also import a list of variables by uploading a file in this view.

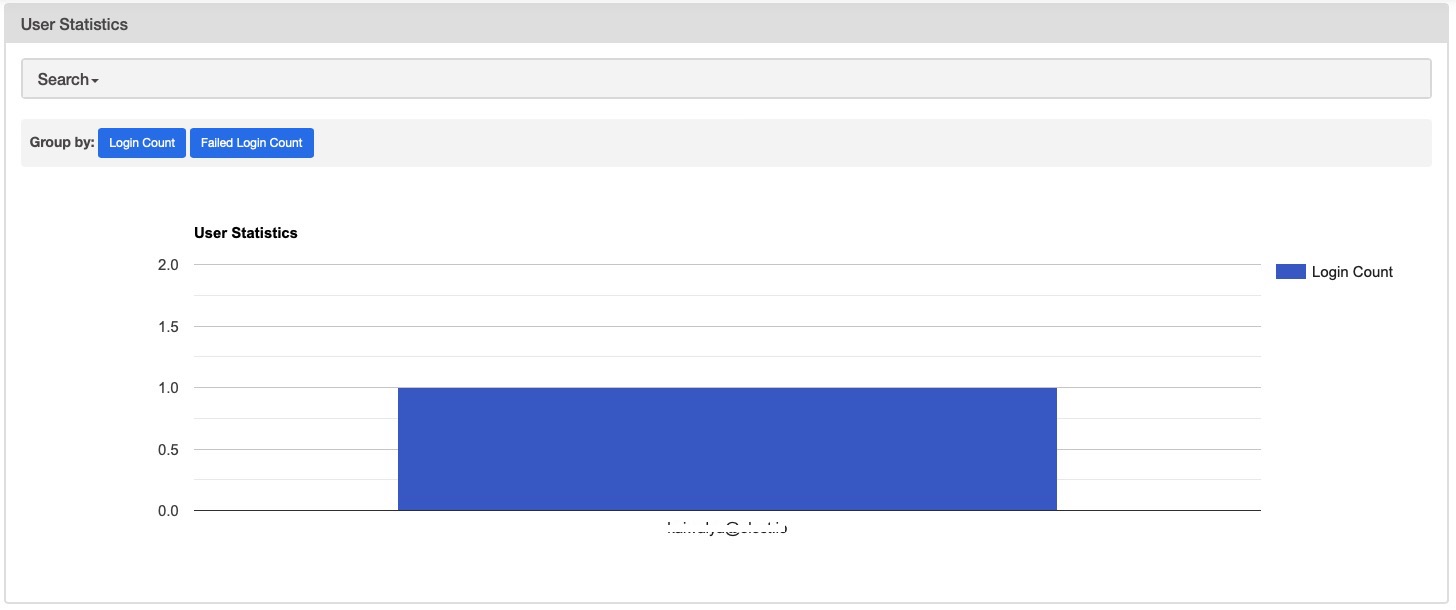
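Before uploading, it can help to generate the import file programmatically and check which keys will have their values hidden. The sketch below serializes variables as a JSON object mapping keys to values, the format the import accepts, and flags keys containing a word from `SENSITIVE_WORDS`. That word list is an illustrative assumption, not Airflow's full default list, and the example variables are hypothetical.

```python
import json

# Illustrative subset of words that cause a variable's value to be hidden;
# Airflow's actual default list is longer and configurable.
SENSITIVE_WORDS = ("password", "secret", "api_key", "token")


def masked_keys(variables: dict[str, str]) -> list[str]:
    """Return keys that contain a sensitive word (case-insensitive)."""
    return sorted(
        key for key in variables
        if any(word in key.lower() for word in SENSITIVE_WORDS)
    )


def to_import_json(variables: dict[str, str]) -> str:
    """Serialize variables as a JSON object of key-value pairs for import."""
    return json.dumps(variables, indent=2, sort_keys=True)


if __name__ == "__main__":
    variables = {
        "env": "staging",
        "report_recipients": "data-team@example.com",
        "warehouse_password": "change-me",
    }
    print(masked_keys(variables))  # ['warehouse_password']
    print(to_import_json(variables))
```

Save the printed JSON to a file such as `variables.json` and upload it in this view; the same file can also be loaded from the command line with `airflow variables import variables.json`.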

User Statistics & Security

Airflow lets administrators view user statistics, such as each user's login count and failed login attempts. To open this page, click "Security > User Statistics" in the top menu. The Security menu also lets you list and configure users, roles, permissions, and more.